As part of Connected Exeter, my challenge was to convert a webpage into music:

Music Machines

What does a webpage sound like?

"We will be using Raspberry Pi computers, python code and midi sound modules to convert data from webpages into music.

The Raspberry Pi is a low cost, credit-card sized computer that enables people of all ages to explore computing,

and to learn how to program in languages like Scratch and Python.

It has the ability to interact with the outside world, and has been used in a wide array of digital maker projects,

from music machines and parent detectors to weather stations and tweeting birdhouses with infra-red cameras.

If you have a midi module, keyboard or USB interface please bring it / them along.

Saturday 10am-5pm, Sunday 10am-1pm"

On Saturday I was based at the Phoenix, mostly in the black box but later on in the auditorium.

On Sunday I was at the CCI building at Exeter College.

I used a Raspberry Pi connected to a USB MIDI interface, which was in turn connected to a Roland PMA-5.

I used Python's urllib library to read a webpage (in this case the Connected Exeter page),

and then converted the characters into numbers.

The numbers were added to a list that was used to generate music within certain parameters.

The list contained over 20,000 numbers.
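
The reading and converting step could look roughly like the sketch below. It is not the original code: it uses the Python 3 spelling `urllib.request`, the helper names `fetch_page` and `chars_to_numbers` are made up for illustration, and folding each character code into the range 0-127 with `% 128` is only an assumption about how characters became numbers.

```python
from urllib.request import urlopen


def fetch_page(url):
    """Download a page and return its text (needs network access)."""
    with urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")


def chars_to_numbers(text):
    """Turn each character into a number in the MIDI data range 0-127.

    Assumption: the character code is simply folded into range with % 128.
    """
    return [ord(ch) % 128 for ch in text]


# Offline demonstration on a short string instead of a live page.
numbers = chars_to_numbers("<h1>Exeter</h1>")
```

With a real page, `chars_to_numbers(fetch_page(url))` would give one number per character, which is how a single page can easily produce a list of many thousands of numbers.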

The first piece uses the numbers in the order they are read,

played back one after the other with a delay of 0.1 seconds between each note,

using the piano sound from the MIDI module.

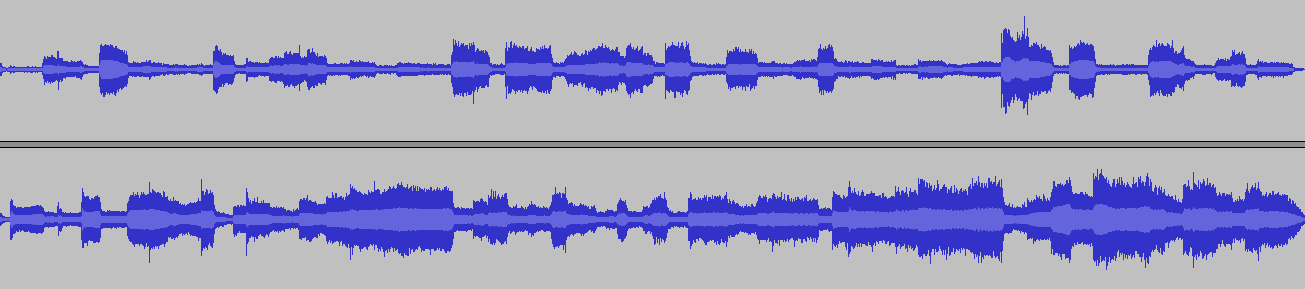
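
As a rough sketch of the first piece, the loop below sends one note per number with 0.1 seconds between notes. The raw bytes are standard MIDI note-on and note-off messages, but everything else is assumed for illustration: the function name `play_stream`, the fixed velocity of 80, and the `send` callable, which stands in for whatever actually writes to the USB MIDI interface.

```python
import time

NOTE_ON = 0x90   # MIDI status byte: note on, channel 1
NOTE_OFF = 0x80  # MIDI status byte: note off, channel 1


def play_stream(numbers, send, delay=0.1, velocity=80, sleep=time.sleep):
    """Play each number as one note, in list order, `delay` seconds apart."""
    for n in numbers:
        note = n % 128  # keep the value inside the MIDI note range
        send(bytes([NOTE_ON, note, velocity]))
        sleep(delay)    # hold the note for the delay, then release it
        send(bytes([NOTE_OFF, note, 0]))


# Stand-in for a real MIDI port: collect the messages in a list,
# and skip the waiting so the demonstration finishes immediately.
sent = []
play_stream([60, 64, 67], sent.append, sleep=lambda seconds: None)
```

Passing `send` and `sleep` in as arguments keeps the sketch independent of any particular MIDI library, so the same loop can be tried without hardware.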

The second piece produces chords from the notes rather than a stream of single notes.

The computer chooses how many notes will be in each chord (a choice from 1 to 6).

There is also the option to not play a chord at all but remain silent.

The volume and the position in the stereo field of each note of the chord are decided by taking numbers from the list.

The delay between each chord is also decided by taking a number from the list.

There is a chance that some notes from the chord will be sustained.

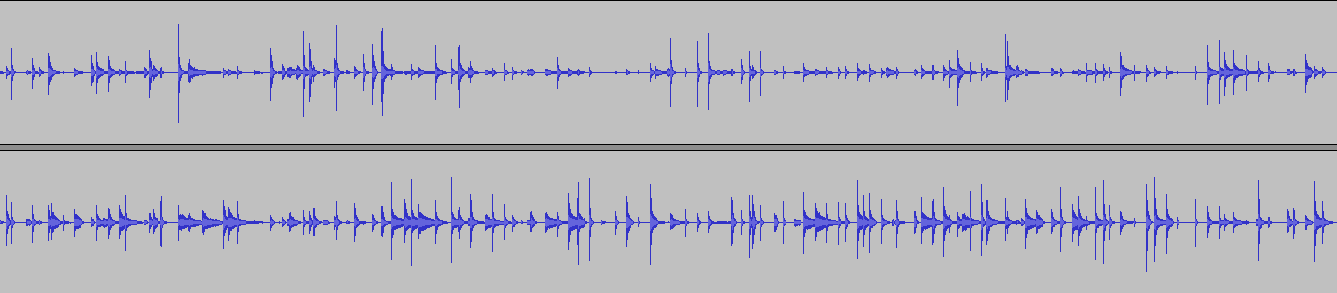
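
The chord rules above might be sketched as follows. This is a guess at the shape of the logic, not the original program: the name `chord_events`, the 20% sustain chance, the 0.1 to 2.0 second delay range, and the use of a random number generator for the chord size are all assumptions. A size of 0 is used here to represent the option of staying silent.

```python
import random
from itertools import cycle


def chord_events(numbers, count, rng=None):
    """Yield `count` chord descriptions built from the number list."""
    rng = rng or random.Random()
    pool = cycle(numbers)  # start again from the top if the list runs out
    for _ in range(count):
        size = rng.randint(0, 6)  # 0 means silence, otherwise 1 to 6 notes
        notes = []
        for _ in range(size):
            notes.append({
                "note": next(pool) % 128,
                "velocity": 1 + next(pool) % 127,  # volume, 1-127
                "pan": next(pool) % 128,           # stereo position, 0-127
                "sustain": rng.random() < 0.2,     # assumed 20% chance
            })
        # Assumed mapping: a list value becomes a delay of 0.1 to 2.0 seconds.
        delay = 0.1 + (next(pool) % 20) / 10.0
        yield {"notes": notes, "delay": delay}


# Four chords from a tiny list, with a seeded generator so runs repeat.
events = list(chord_events([72, 101, 108, 108, 111], 4, random.Random(1)))
```

Each event only describes a chord; turning it into sound would mean sending a note-on per entry, waiting for `delay`, and releasing every note not marked `sustain`.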

The third piece is similar to the second but with the delay between each event shortened.

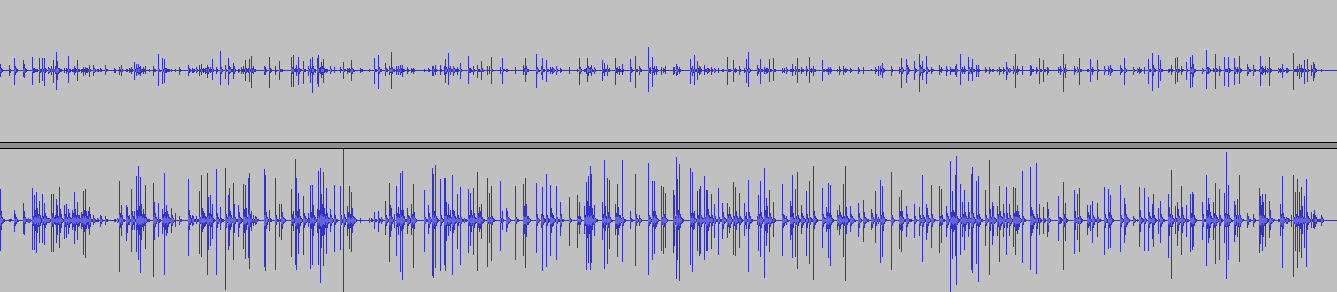

The fourth piece is similar to the third but uses the list to generate the sound for each note

rather than using solely the piano.
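
One way to pick a sound from the list is a MIDI program change message, sketched below. The message format is standard MIDI, but the helper name `program_change` and the `% 128` folding are assumptions, and which instrument a given program number selects depends on the sound module.

```python
PROGRAM_CHANGE = 0xC0  # MIDI status byte: program change, channel 1


def program_change(number, channel=0):
    """Build a message selecting one of the 128 MIDI programs (sounds)."""
    return bytes([PROGRAM_CHANGE | (channel & 0x0F), number % 128])


# A list value above 127 is folded back into range before use.
msg = program_change(200)
```

Sending such a message before each note-on would give every note its own sound.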

The fifth piece uses the principles already established but uses only one sound,

in this case one that is capable of sounding continuously rather than fading away.

The sixth piece returns to the piano sound and shortens the delay in between each event.

The seventh piece uses percussive sounds.

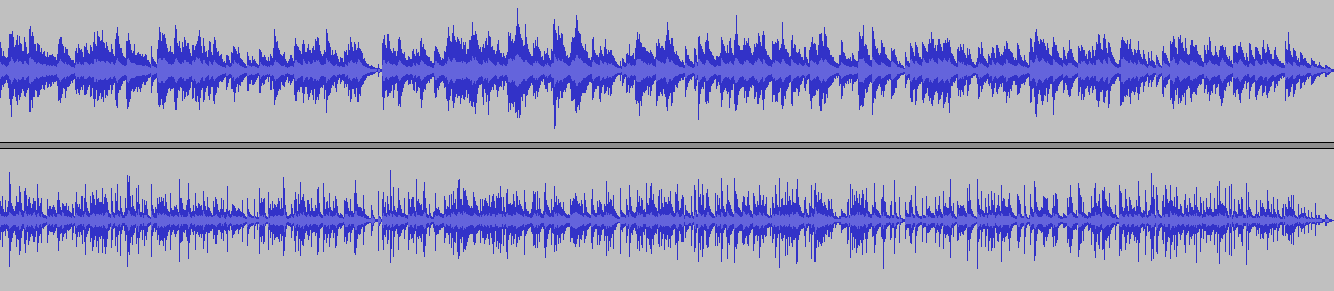

The eighth piece uses the piano sound but here the delay between events is much shorter

and the stereo field reflects how a piano sounds,

in other words, low notes are heard from the left and high notes from the right.
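
A piano-style stereo layout can be sketched by mapping pitch to the MIDI pan controller (controller number 10), where 0 is hard left and 127 is hard right. The linear mapping across the 88-key range and the helper names `pan_for_note` and `pan_message` are assumptions for illustration, not the original code.

```python
CONTROL_CHANGE = 0xB0  # MIDI status byte: control change, channel 1
PAN = 10               # MIDI controller number for pan

LOW_NOTE, HIGH_NOTE = 21, 108  # A0 and C8, the ends of an 88-key piano


def pan_for_note(note):
    """Map pitch to pan: lowest key hard left, highest key hard right."""
    clamped = max(LOW_NOTE, min(HIGH_NOTE, note))
    return round((clamped - LOW_NOTE) * 127 / (HIGH_NOTE - LOW_NOTE))


def pan_message(note, channel=0):
    """Build the control change message that sets pan for this pitch."""
    return bytes([CONTROL_CHANGE | (channel & 0x0F), PAN, pan_for_note(note)])
```

Pan is a channel-wide setting in MIDI, so giving simultaneous notes different positions would probably mean spreading them over several channels.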


The Music Machine software is intended for private use only. It is not to be used in public or for commercial purposes without permission.